Balancing Quality, Speed, and Innovation: Expert Insights on Modern Clinical Trials

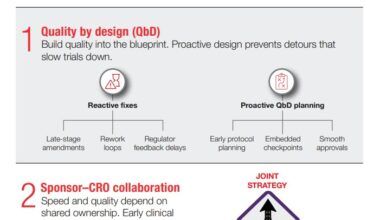

Discover how integrating quality from the outset can accelerate clinical trials, drive efficiency, and ensure reliable outcomes in today’s evolving drug development landscape.

In clinical development, the tension between speed and quality has long shaped how sponsors design and execute trials. But as the industry evolves, leaders are redefining what it means to deliver faster without compromise. This candid conversation explores how quality, when integrated from the outset, becomes a true accelerator rather than an obstacle.

Valerie Brown, vice president of global quality assurance and compliance at the PPD™ clinical research business of Thermo Fisher Scientific, shares how her team translates this philosophy into action. In the Q&A below, she discusses real-world examples of quality driving efficiency, the unique challenges of adaptive trial designs, strategies for seamless development-to-manufacturing transitions, and the importance of maintaining rigor as real-world evidence reshapes clinical research. Her perspective underscores a powerful truth: in modern drug development, quality isn’t a trade-off – it’s the pathway to speed, reliability, and sustained success.

Breaking the myth: Quality vs. speed

Q: In your blog post, “Quality is Speed,” you reframe the traditional trade-off between quality and acceleration. Could you share a real-world example where embedding quality from day one meaningfully expedited a study—rather than delayed it?

A: That’s a great question. I think about a Phase II oncology program where we built quality in from the protocol concept, not just from the first patient in or first visit. Three things really helped enable that. One was protocol simplification by design. We cut three non-decision-critical procedures and harmonized visit windows with the endpoint model. This reduced avoidable deviations by about 35% in the first two months. The second was a risk-based quality monitoring plan tied to our critical-to-quality factors.

With this, we have predefined data quality signals, like out-of-window dosing or tumor assessment timing, and we monitor those centrally and daily. Lastly, there’s the systems validation and edit checks piece. With the electronic data capture, we targeted critical-to-quality-aligned edit checks and a clean handoff to the interactive response technology. By doing that, the query volume per patient fell by about 30%, and we reached our last patient roughly six weeks earlier than the original plan – without increasing monitoring days, adding resources, or accepting higher risk. So, the speed was the downstream effect of less rework and cleaner first-time data. To get to that point, it’s about building that in from the beginning, right at the protocol stage, so that we’re not trying to build the airplane while we’re flying it when we have a first patient in.
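As an illustration of the kind of predefined data quality signal described above, here is a minimal Python sketch of an out-of-window check. It is not the team’s actual tooling: the `Visit` fields, the `window_days` tolerance, and the sample visits are all hypothetical, chosen only to show how such a rule could be evaluated centrally each day.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Visit:
    subject_id: str
    visit_name: str
    target_date: date   # date the protocol schedule calls for
    actual_date: date   # date the visit actually took place
    window_days: int    # allowed deviation either side of target (hypothetical)


def out_of_window(visits):
    """Return the visits whose actual date falls outside target +/- window_days."""
    return [
        v for v in visits
        if abs((v.actual_date - v.target_date).days) > v.window_days
    ]


# Made-up sample data, for illustration only.
visits = [
    Visit("S001", "Cycle 2 dosing", date(2024, 3, 1), date(2024, 3, 2), 2),
    Visit("S002", "Cycle 2 dosing", date(2024, 3, 1), date(2024, 3, 6), 2),
    Visit("S003", "Tumor assessment", date(2024, 4, 10), date(2024, 4, 3), 5),
]

flagged = out_of_window(visits)
for v in flagged:
    # Print the subject, the visit, and the signed offset from target in days.
    print(v.subject_id, v.visit_name, (v.actual_date - v.target_date).days)
```

In this sketch, S002 (five days late against a two-day window) and S003 (seven days early against a five-day window) would be flagged for follow-up, while S001 stays within its window.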

Q: Does this then lead to cost savings as well?

A: I think it does, because if you think about it, you don’t have any rework. Whatever your cost was initially, that’s your set cost. But the fact that you were able to deliver roughly six weeks earlier gives an advantage to the sponsor in terms of getting their filing in and getting their drug reviewed, approved, and maybe onto the market faster. So, there’s a longer period of potential revenue generation for that product.

Adaptive trial design and quality assurance

Q: Adaptive trials are gaining traction in the industry. What unique quality challenges do they introduce, and how does your team ensure data integrity and compliance while maintaining that built-in flexibility?

A: Anytime you talk about something that’s adaptive, it presents a challenge, right? Because adaptive designs mean that there are more moving parts – more things that can go one way or the other. And most of the regulators want each move to be pre-specified, controlled, and traceable. So basically, you can have these moving parts, but you have to have a way of controlling them. With that in mind, we focus on things like an adaptation playbook: the decision rules, the simulations, and the spending plans, all of which have to be finalized and archived before your first patient in. Any change that comes about needs to go through formal change control, with an impact assessment, so you can understand how it is going to affect the overall project.

Then, of course, you need operational firewalls. When you talk about interim analysis or interim analysis data, those teams are segregated, and signals flow to the blinded team only on a minimum need-to-know basis to avoid operational bias. Additionally, you’ll have version control across the arms. We must treat each adaptation – the arm drop, the arm add, the sample size re-estimation – like it’s a mini release. Each one gets its own little production, if you will. There are controlled document updates, there’s targeted site retraining, and there’s clear effective date tracking. And of course, from a traceability standpoint, you’ve got to have an aligned data structure, so the analysis is reproducible across all the adaptations. There has to be a single source of truth. The bottom line is that everything has to be pre-specified, there need to be controls or guardrails in place, and it has to be traceable back to your source of truth.

Q: It sounds like the key is that while people are looking for that flexibility, it has to be flexibility within an established set of boundaries, or a really well thought out and documented set of guidelines, so that you can still measure, control, and reproduce that flexible approach. Is that accurate?

A: Exactly. From a risk-based quality monitoring standpoint, you can have dashboards where you can see and assess things like screen rate spikes or visit timing slippage – anything that’s going to really affect how the trial progresses. In the end, it’s all about being inspection ready, so that when the regulators come in, we’ve got a dossier for them that has all the simulations and decision memos, the interim blocks, and the audit trails, so that they can see one complete story.

Risk mitigation focus

Q: When CROs and CDMOs work in an integrated model, what steps are essential to reduce quality risks during the transition from clinical development to manufacturing?

A: That’s always been the age-old issue, right? It’s the handoff from development to manufacturing and on towards the commercial space. We need to manage it as a gated, quality-by-design tech transfer, and there’s got to be clear accountability. So basically, a joint quality agreement. Even if it’s within the same organization, the quality agreement is going to outline who’s going to do what. There’s a RACI – so who’s responsible, who’s accountable, who’s consulted, and who’s informed – and you define who the release authorities are, so that there’s ownership of any deviations or variances. Then there have to be real-time escalation paths, so when things happen, they can be brought to the people who can make the decisions in real time. Then, from a manufacturing standpoint, there are roles in terms of lot disposition – what material are we going to release? The big piece from a transfer standpoint, I think, is the knowledge, and that’s where many of us in the industry kind of lose it. For example, there’s the process description, the critical process parameters, the critical quality attributes, and the change history. That’s where people tend to fall short. But it all has to be organized so that you can retrieve it really rapidly.

From an analytical standpoint, how ready are your analytical methods? They need to be stage appropriate, whether qualified or validated, with cross-site method transfers and comparability protocols in place, in order to avoid any late surprises with the specifications. Once upon a time it was thought that you don’t have to validate the methods until you get to maybe Phase III. Now all of that is done really early in development, in Phase I as well. There’s also the agreed data format to consider, so data interoperability and a clear, secure exchange of information like the batch records, the certificate of analysis, environmental monitoring, contamination control, all those things – especially if you’re in sterile fill-finish. And it has to be supported with metadata that supports that whole genealogy. You have to be able to trace it back from the very beginning.

Change management and risk review are also critical, because we need to run prospective risk assessments. You can use risk assessments for the scale-up, any raw material changes, and equipment differences, and then of course the release and label readiness. There has to be a clear distinction between investigational medicinal product controls, which is what we use in clinical research, and commercial controls. I think a lot of folks try to apply commercial rigor to some of the things that we’re doing in research, and it really doesn’t work. When you’re looking at artwork, you look at the country-specific labeling and anything else that will make sure the release and label readiness is there, and then of course mock recalls and deviation drills.

Mock recalls are there to prove that the signal-to-action chain works. For example, if something happens – even in the clinical setting before you get to commercial – what are the triggers, and how do we catch it before that first engineering batch from commercial or from the CDMO goes out? The goal is to catch the issue during the engineering lot, which is beyond proof of concept. It’s like a pilot batch, if you will, and it comes before the PPQ batch, which is the batch where they would say this is how we’re going to operate in a normal environment and this is what we’re going to be doing from a commercial standpoint going forward.

There also have to be parallel readiness reviews, so an independent QA release plus the technical go/no-go decision, which will ensure speed without bypassing quality. The outcome – if you’re doing this the right way with all these different things – is going to be fewer late revalidations, which manufacturing sometimes has to do. You’re going to have a cleaner batch release, and then you’re going to have smoother regulatory Q&As, because the story across clinical and the CDMO is going to be consistent and well documented. A clear handoff is critical so that anything you find out in clinical research is transferred and shared – that’s part of the whole knowledge transfer package.

Q: Are there any benefits from a quality perspective to going through this process within one company versus handing off from the clinical development phase to a different company in the manufacturing phase?

A: On its face, one would think that being in the same company is probably ideal, right? It should be a lot easier, because a lot of the things that are being worked on – like the process description, your critical quality attributes, and, from a materials standpoint, your critical process parameters – should be well understood and easily available. But I think the biggest advantage is that as you’re putting in this joint quality agreement, where you’re defining who’s going to do what and who’s going to be responsible for what, you’ve got real-time lines into who those individuals are, and you can work with them on a regular basis. So, from a RACI standpoint, you know who’s responsible. You also have access to information on how mature the methods are. You have access to stability data, and you’ve got access to change history. And of course, all of that can be well organized for everybody to look at.

Real-world evidence (RWE) as a quality lens

Q: Real-world data is becoming central to modern clinical programs. How do you approach maintaining rigorous quality control when leveraging RWE—especially in decentralized or pragmatic trial models?

A: You have to treat real-world evidence and real-world data just like any other GxP data stream. It’s not different, it’s not separate. You have to have the controls in place, and you have to make sure that it’s fit for purpose. So there has to be mapping. You have to map data source systems, linkage methods, and refresh cycles, and understand who is permitted to use them. For the fit-for-purpose assessment, you have to have pre-specified accuracy, completeness, timeliness, and concordance thresholds. You have to run pilots to quantify what’s missing and what’s maybe misclassified. And within that, in terms of real-world evidence and real-world data, is the validation piece for the computerized system. So, you’re talking about 21 CFR Part 11, which applies to e-data and e-sources. It could be for applications; it could be for pipelines; it could be for wearables. But you have to maintain an audit trail for the system that you’re using and for the data that it is capturing, because the big thing that regulators are looking for is changing data. Is there an opportunity for the data to change, and is what you have in your source the same as what’s in the system?

There’s also privacy preservation, so using tokens and HIPAA-compliant methods, and then of course bias and confounding control. You want to partner with biostats to pre-specify the adjustment methods, and then QA will verify the inputs and the traceability of any variances, deviations, or issue events that come out of that.

As it pertains to decentralized operations, central monitoring will flag data that is latent or non-compliant, and it can look at device non-adherence and pinpoint any outliers. That allows rapid issue management and mitigation, which keeps the protocol intact, so that you’re not jeopardizing what you have outlined in your study plan or in your protocol.

So real-world data and real-world evidence are treated just like any other GxP data stream, because people are making decisions based on what they get from it.
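To make the fit-for-purpose assessment mentioned above more concrete, here is a minimal Python sketch that scores a real-world data extract against pre-specified completeness and concordance thresholds. Everything in it is an assumption for illustration: the field names, the diagnosis codes, the threshold values, and the use of a single compared field are hypothetical and are not drawn from the interview.

```python
def fit_for_purpose(records, reference, required_fields, thresholds):
    """Score a data extract against pre-specified thresholds.

    completeness: share of required field values that are populated.
    concordance:  share of records whose 'diagnosis' matches the reference
                  source, among records that appear in the reference.
    Assumes non-empty inputs; a real assessment would handle empty extracts.
    """
    total_cells = len(records) * len(required_fields)
    filled = sum(
        1 for r in records for f in required_fields
        if r.get(f) not in (None, "")
    )
    completeness = filled / total_cells

    compared = [r for r in records if r["id"] in reference]
    matches = sum(1 for r in compared if r.get("diagnosis") == reference[r["id"]])
    concordance = matches / len(compared)

    scores = {"completeness": completeness, "concordance": concordance}
    passed = all(scores[name] >= limit for name, limit in thresholds.items())
    return scores, passed


# Made-up pilot extract and reference source, for illustration only.
records = [
    {"id": "P1", "diagnosis": "C50", "dose_date": "2024-01-05"},
    {"id": "P2", "diagnosis": "C34", "dose_date": ""},
    {"id": "P3", "diagnosis": "C18", "dose_date": "2024-01-09"},
    {"id": "P4", "diagnosis": None, "dose_date": "2024-01-11"},
]
reference = {"P1": "C50", "P2": "C34", "P3": "C19", "P4": "C61"}
thresholds = {"completeness": 0.90, "concordance": 0.95}  # hypothetical limits

scores, passed = fit_for_purpose(
    records, reference, ["diagnosis", "dose_date"], thresholds
)
print(scores, passed)
```

In this sketch, the pilot extract would fall short of both hypothetical thresholds, which is the kind of result that quantifies what is missing or misclassified before the source is relied on.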

Quality and speed: stronger together

As Valerie’s insights make clear, speed and quality are no longer competing priorities; they’re interconnected measures of clinical excellence. From early design through data delivery, the most successful development programs embed quality into every decision, creating the foundation for faster, more reliable outcomes. By aligning strategy, systems, and science from the start, sponsors can accelerate with confidence and deliver results that stand up to both regulatory and patient expectations.

Ready to accelerate without compromise?

Explore how embedding quality from day one can help you deliver smarter, faster, and with greater confidence.
